Scalable 3D Video of Dynamic Scenes

The Visual Computer, vol. 21, no. 8–10, pp. 629–638, 2005.

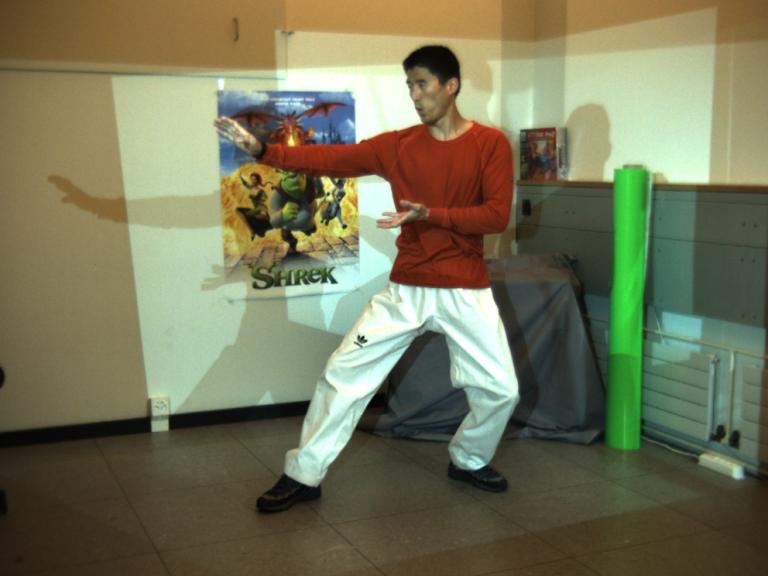

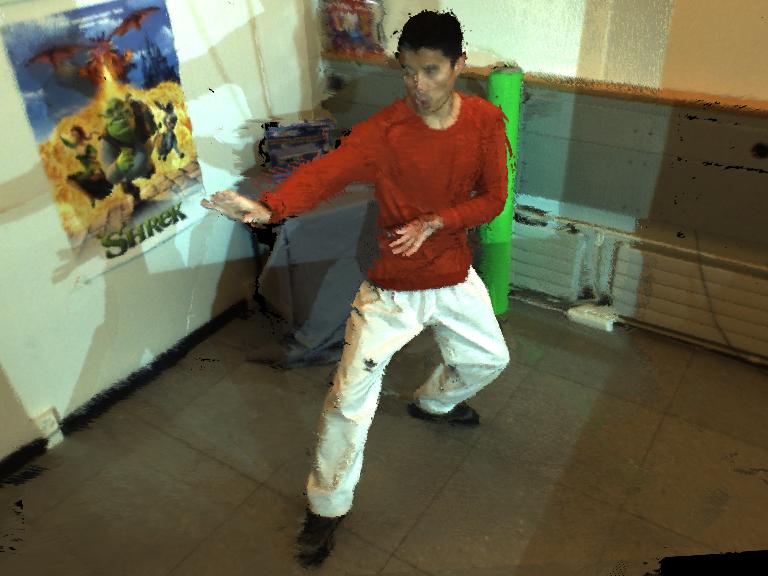

Abstract

In this paper we present a scalable 3D video framework for capturing and rendering dynamic scenes. The acquisition system is based on multiple sparsely placed 3D video bricks, each comprising a projector, two grayscale cameras, and a color camera. Relying on structured light with complementary patterns, texture images and pattern-augmented views of the scene are acquired simultaneously by time-multiplexed projections and synchronized camera exposures. Using space-time stereo on the acquired pattern images, high-quality depth maps are extracted, whose corresponding surface samples are merged into a view-independent, point-based 3D data structure. This representation allows for effective photo-consistency enforcement and outlier removal, leading to a significant decrease in visual artifacts and high rendering quality using EWA volume splatting. Our framework and its view-independent representation allow for simple and straightforward editing of 3D video. To demonstrate its flexibility, we show compositing techniques and spatiotemporal effects.
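The idea behind complementary patterns can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes a single scanline, a binary pattern, and ideal linear cameras, and every name in it (`make_pattern`, `invert`, `expose`) is hypothetical. A camera exposed during one projection records the pattern-modulated scene, while a camera exposed across a pattern and its inverse integrates to uniform illumination and records plain texture.

```python
import random


def make_pattern(width, seed=0):
    """Hypothetical binary stripe pattern: 1 = lit pixel, 0 = dark pixel."""
    rng = random.Random(seed)
    return [rng.randint(0, 1) for _ in range(width)]


def invert(pattern):
    """Complementary pattern: lit where the original is dark, and vice versa."""
    return [1 - p for p in pattern]


def expose(reflectance, *patterns):
    """Idealized camera: integrate reflected light over the given projections."""
    return [r * sum(p[i] for p in patterns) for i, r in enumerate(reflectance)]


# Assumed surface reflectance along one scanline (illustrative values only).
reflectance = [0.2, 0.9, 0.5, 0.7, 0.1, 0.6]
pattern = make_pattern(len(reflectance))
inverse = invert(pattern)

# Short exposure during one projection: pattern-augmented view for stereo.
stereo_view = expose(reflectance, pattern)

# Exposure spanning the pattern and its inverse: the two sum to uniform
# light, so the pattern cancels and only the texture remains.
texture_view = expose(reflectance, pattern, inverse)
```

In this simplified model `texture_view` equals `reflectance`, which is why a single projector can serve depth and texture acquisition at once when the exposures are synchronized with the projections.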

Available Files: [BibTeX] [DOI] [PDF]